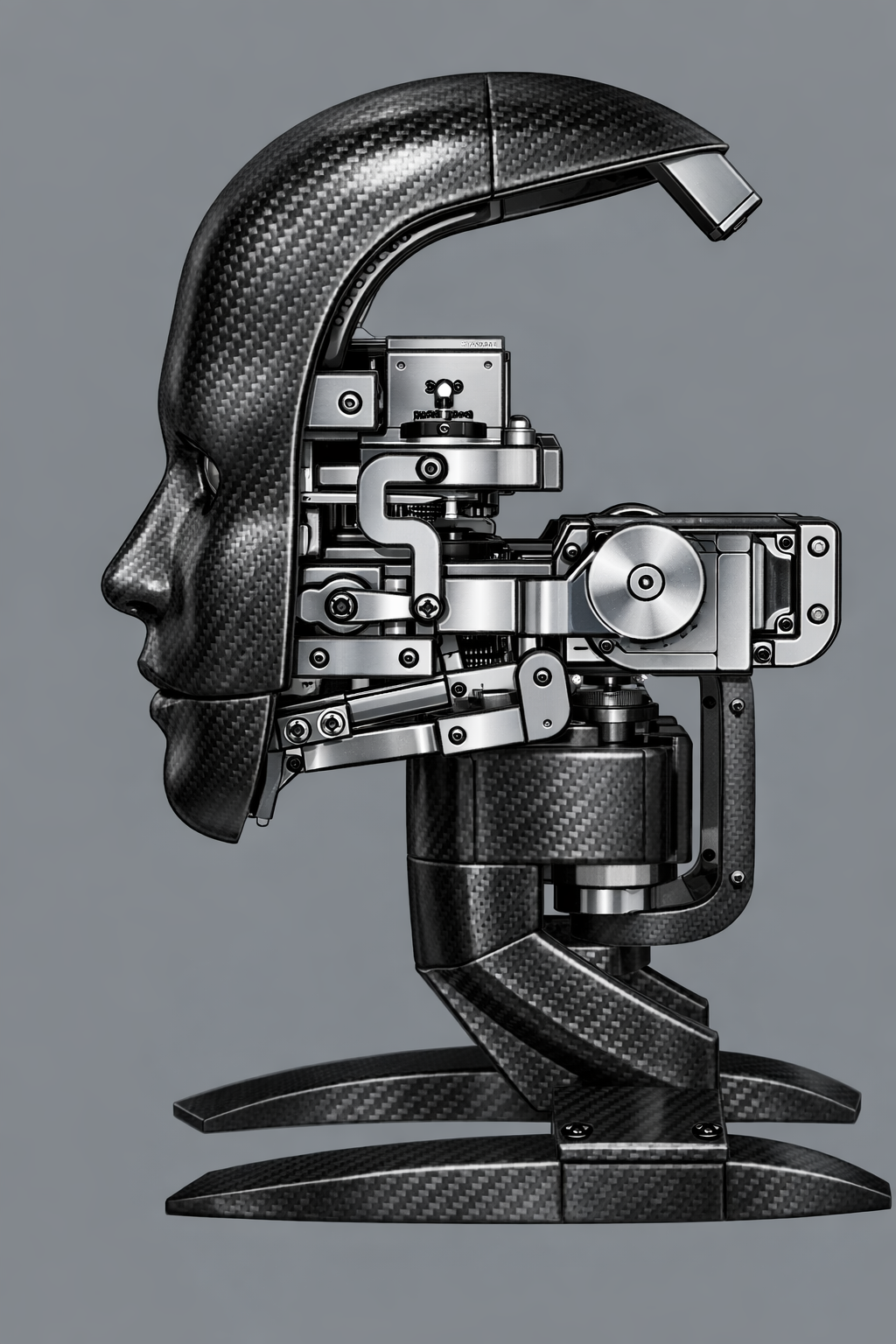

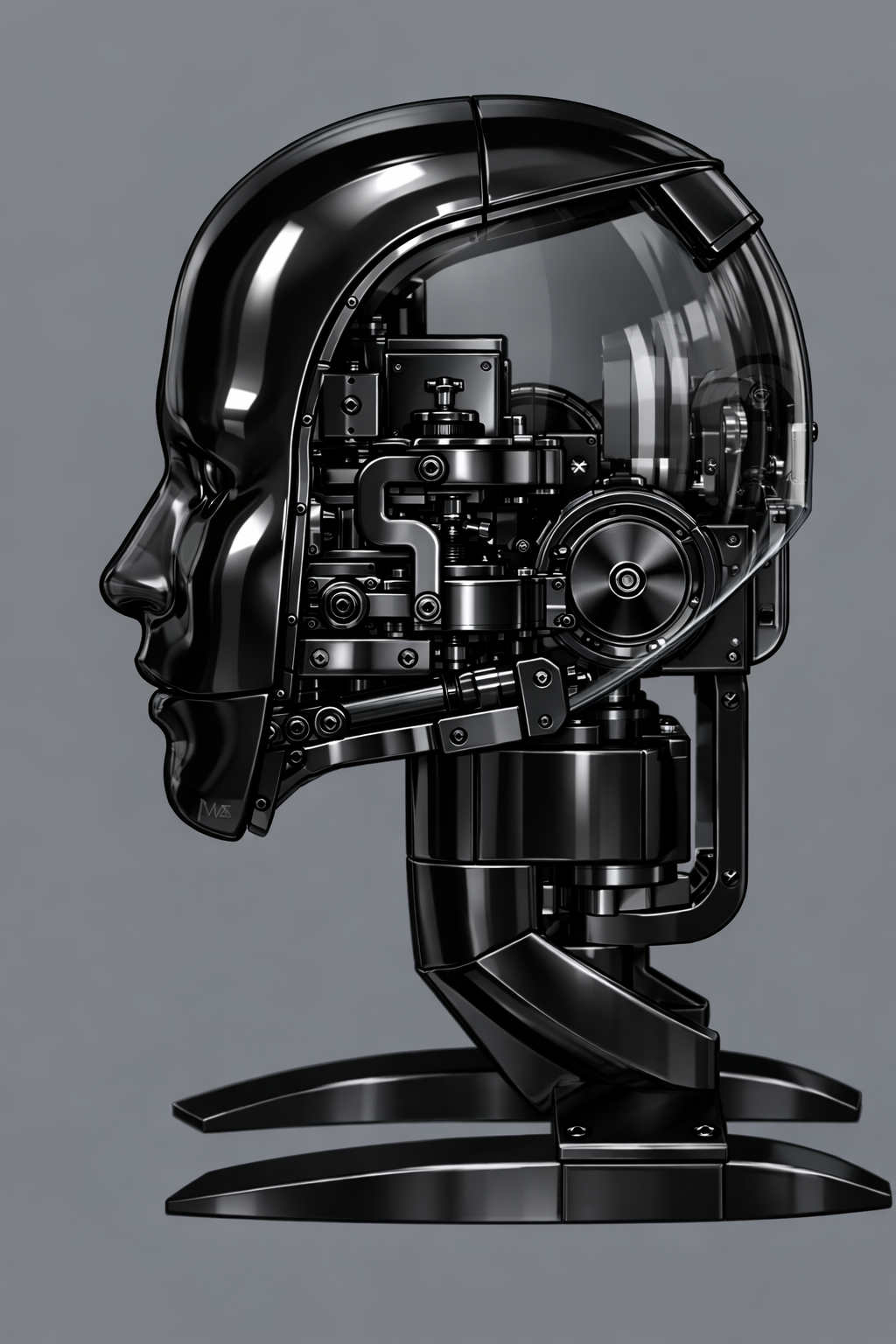

Engineering: 5 Servos, Audio, Pi 5

Expression

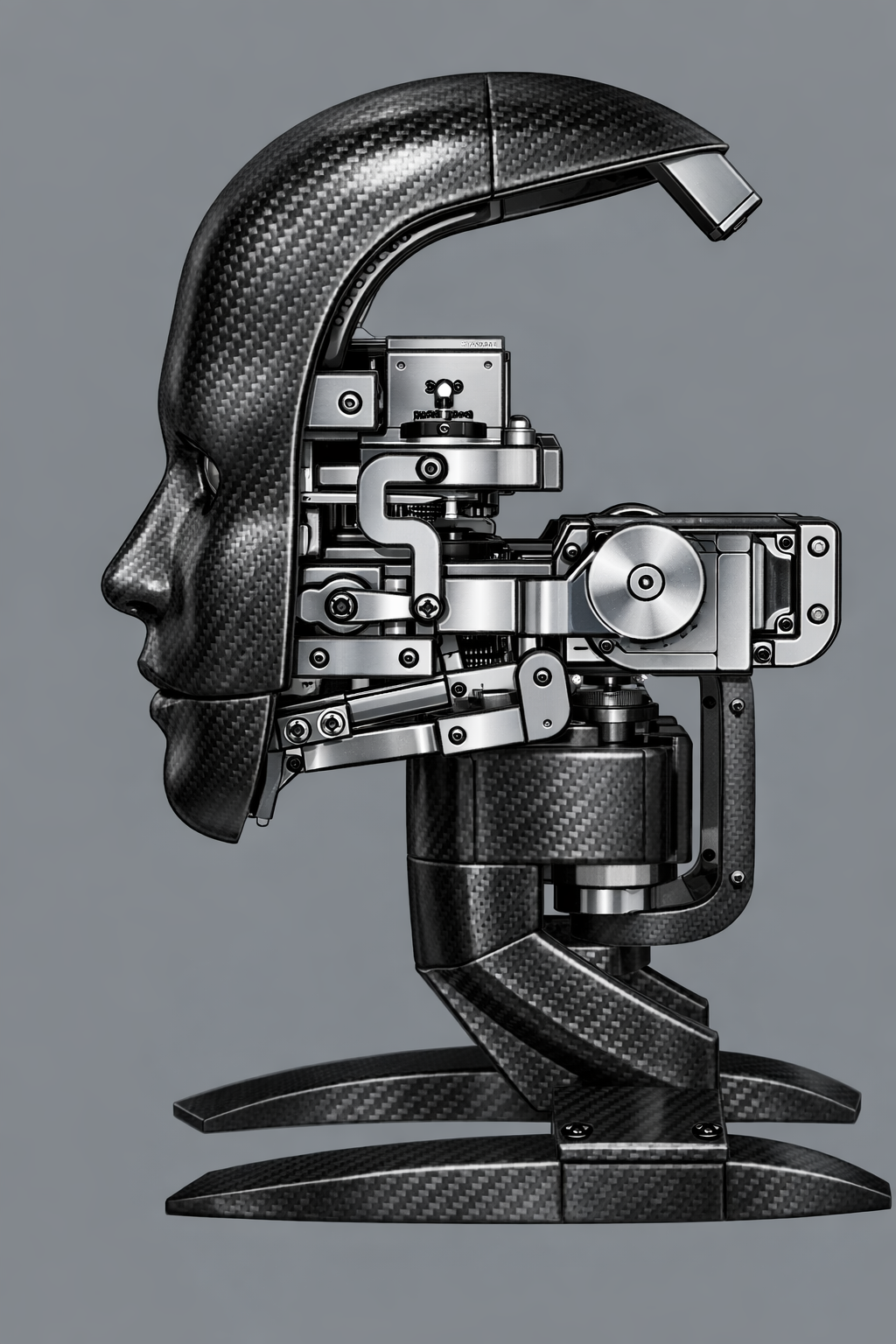

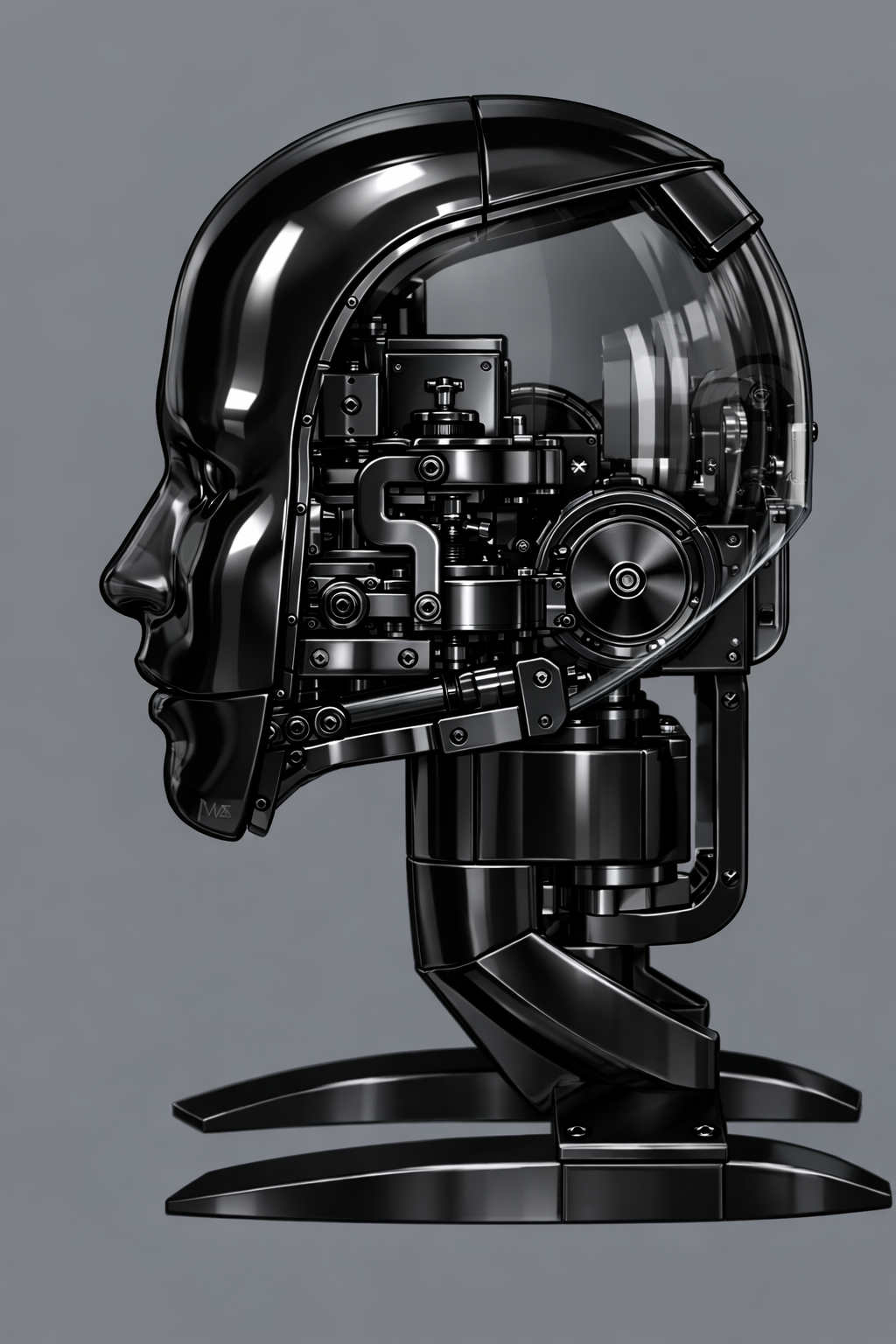

Lifelike facial mechanics driven by synchronized servos.

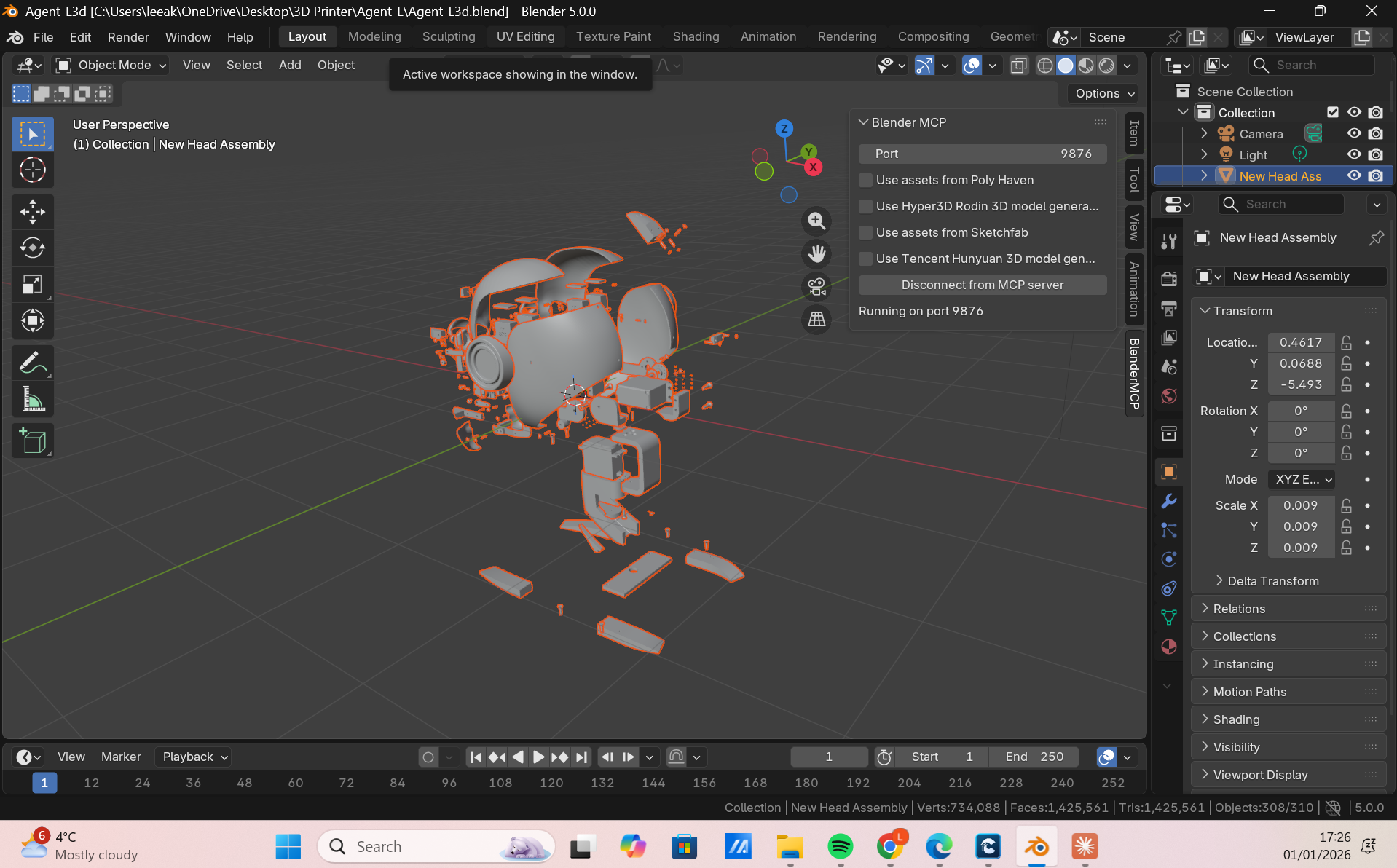

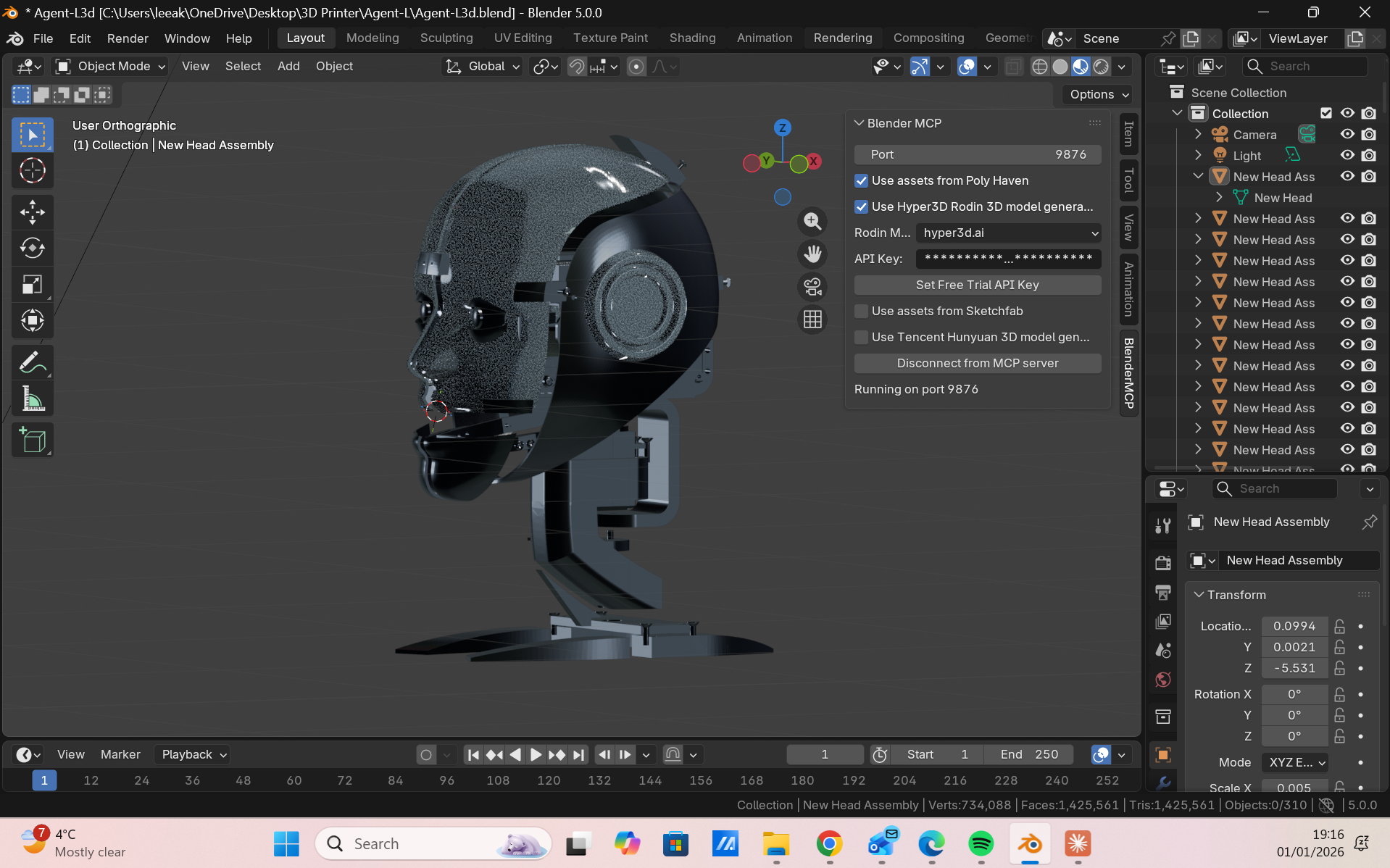

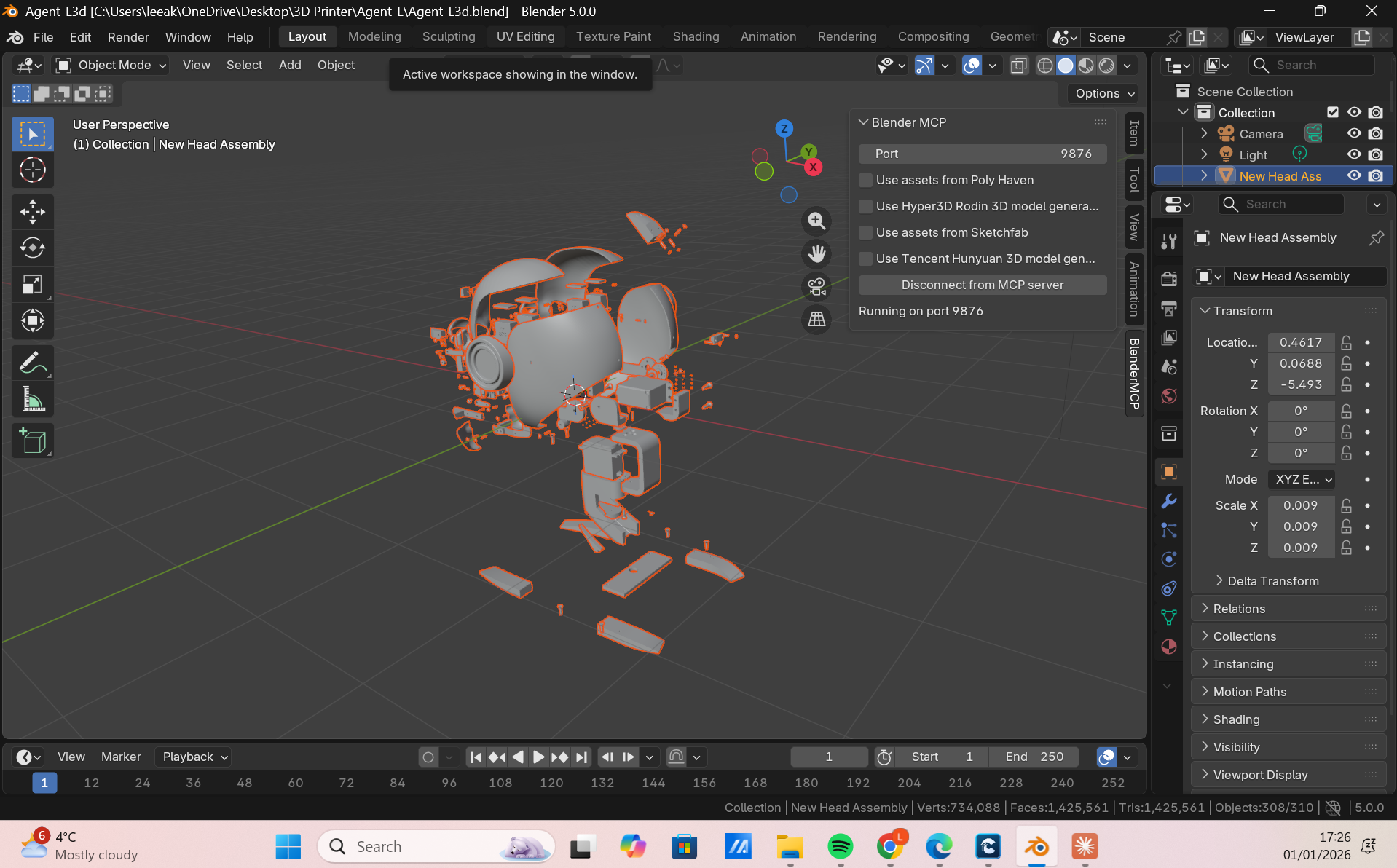

Manufacturing

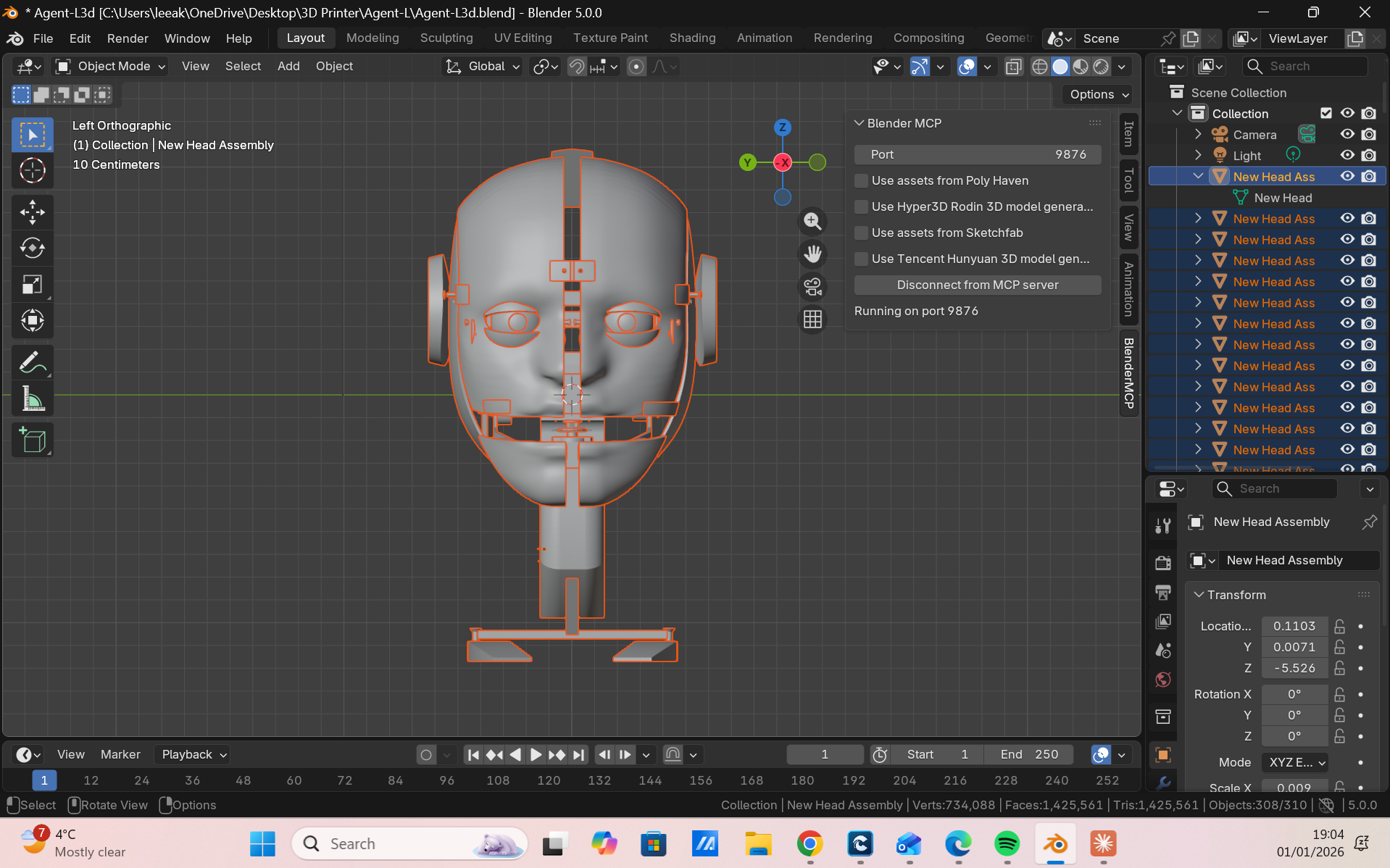

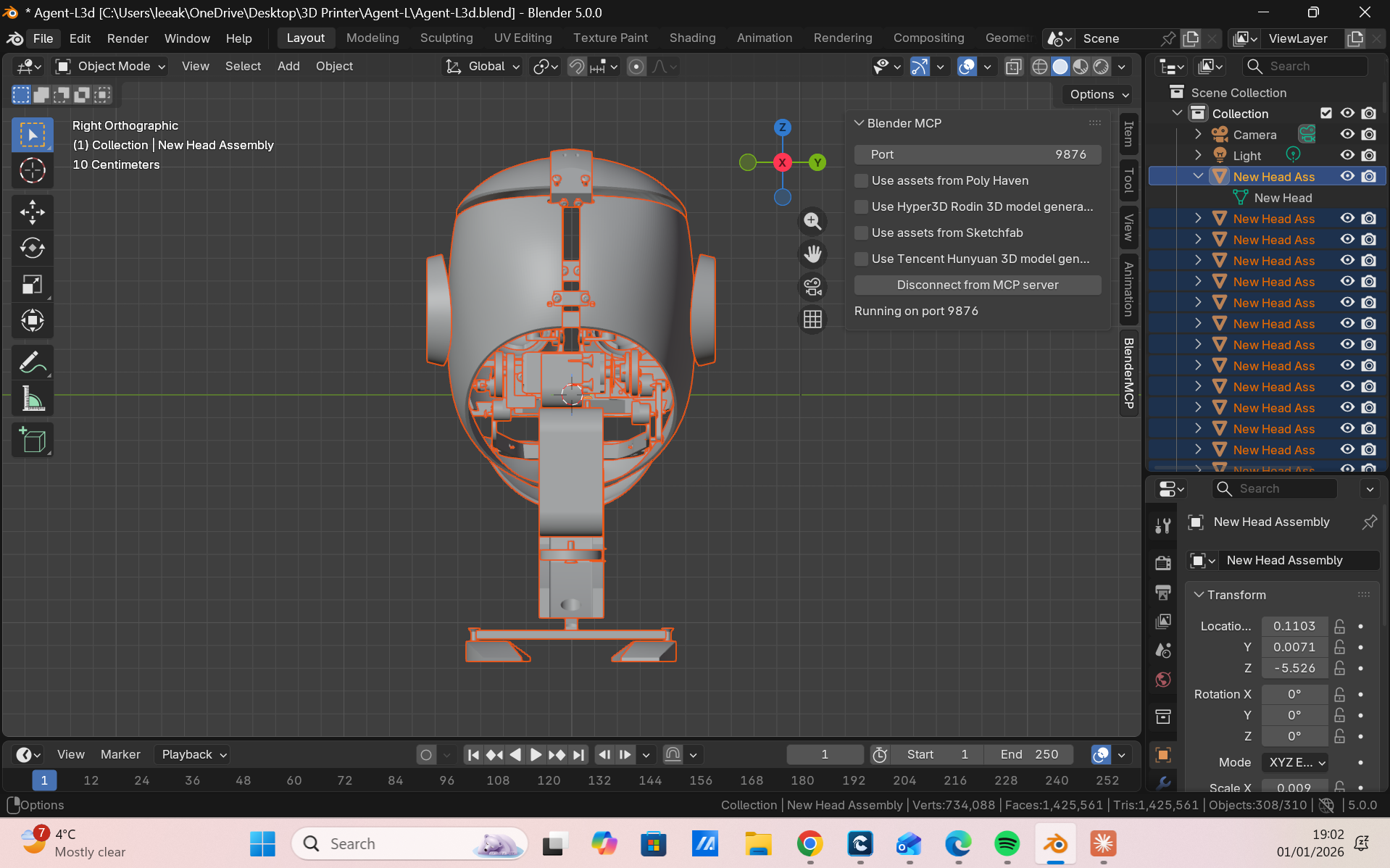

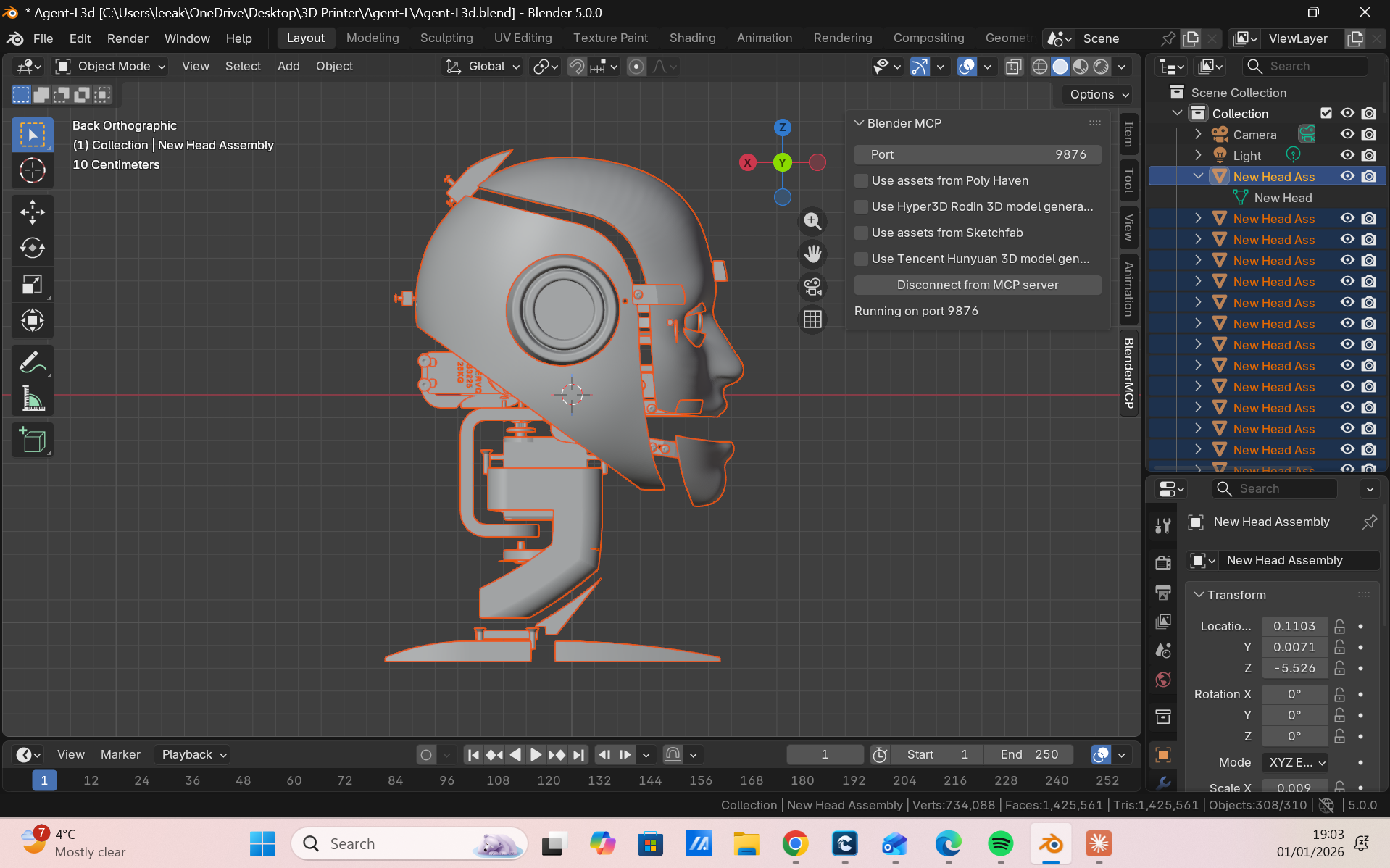

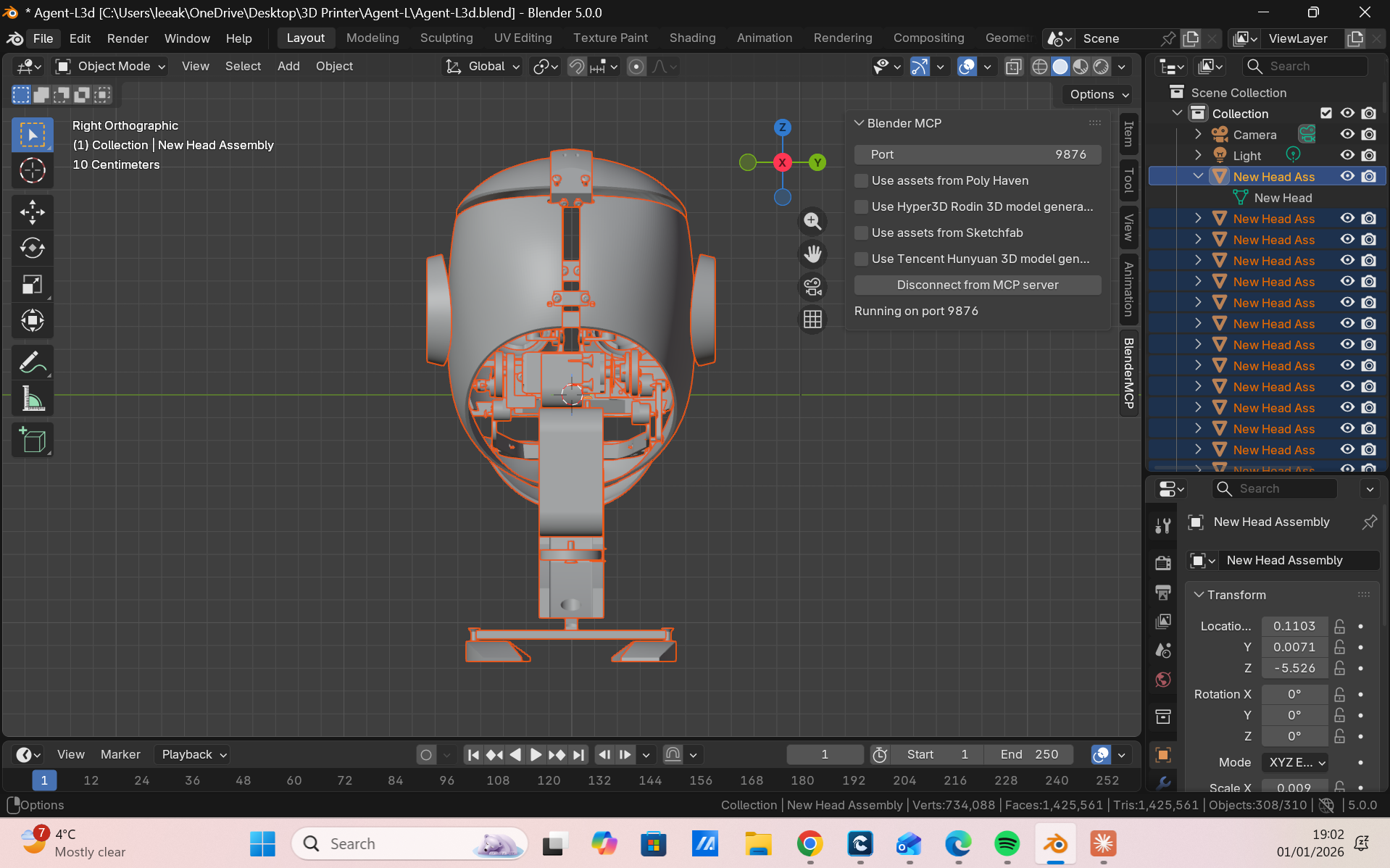

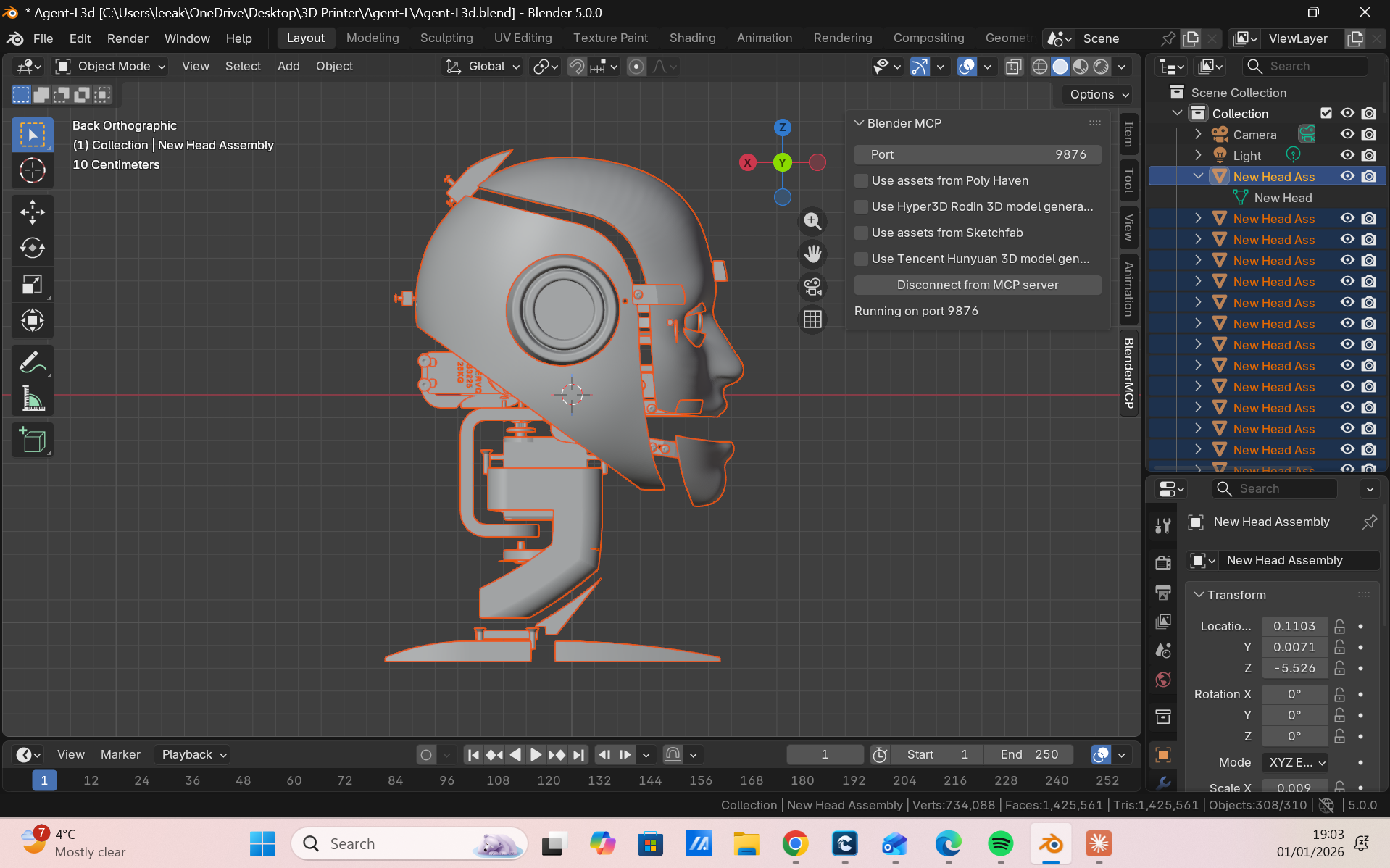

Blender

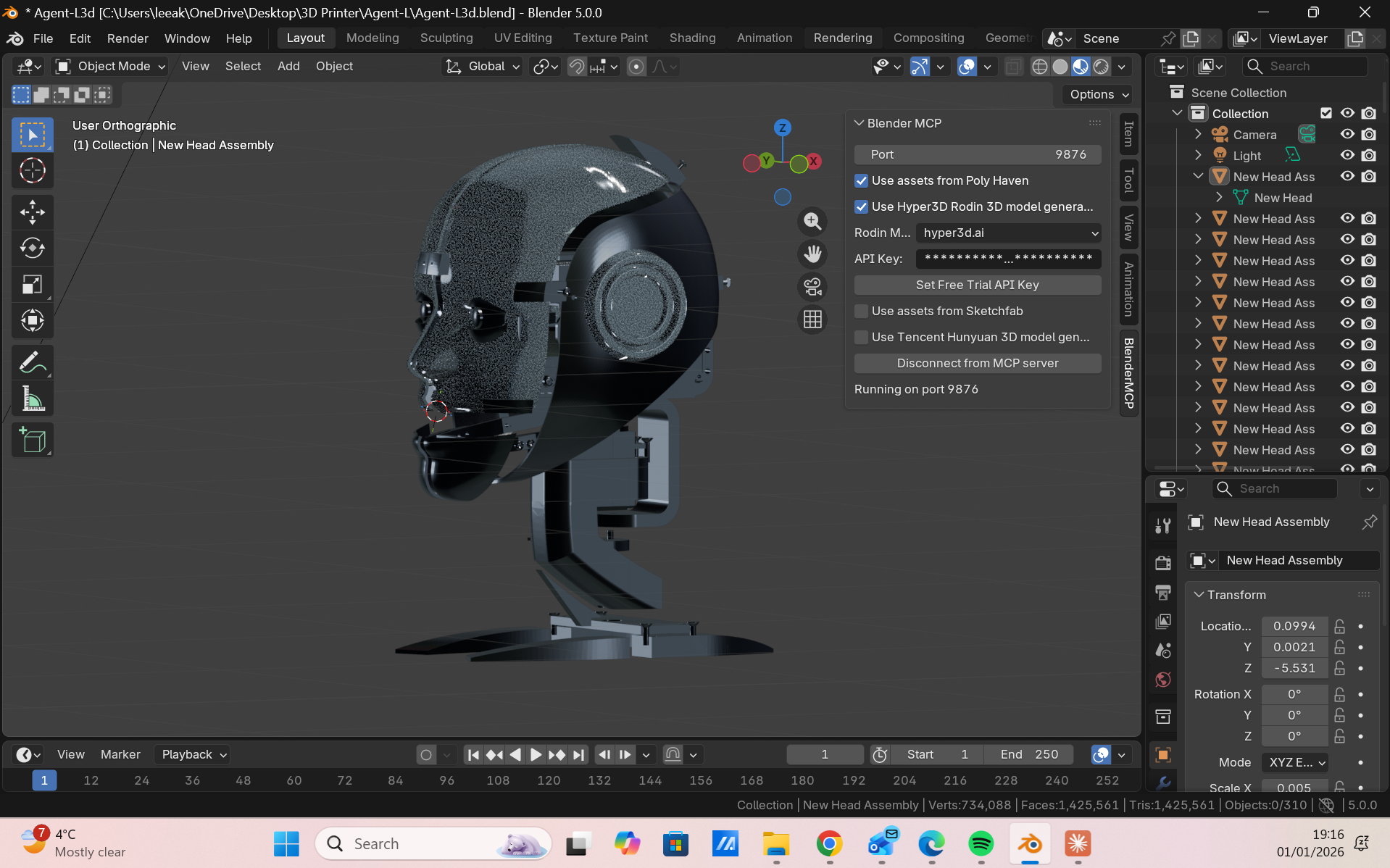

Front, Back, Side

Architecture: Edge, OpenAI, Real-time

Intelligence

Understanding

Voice

Expression

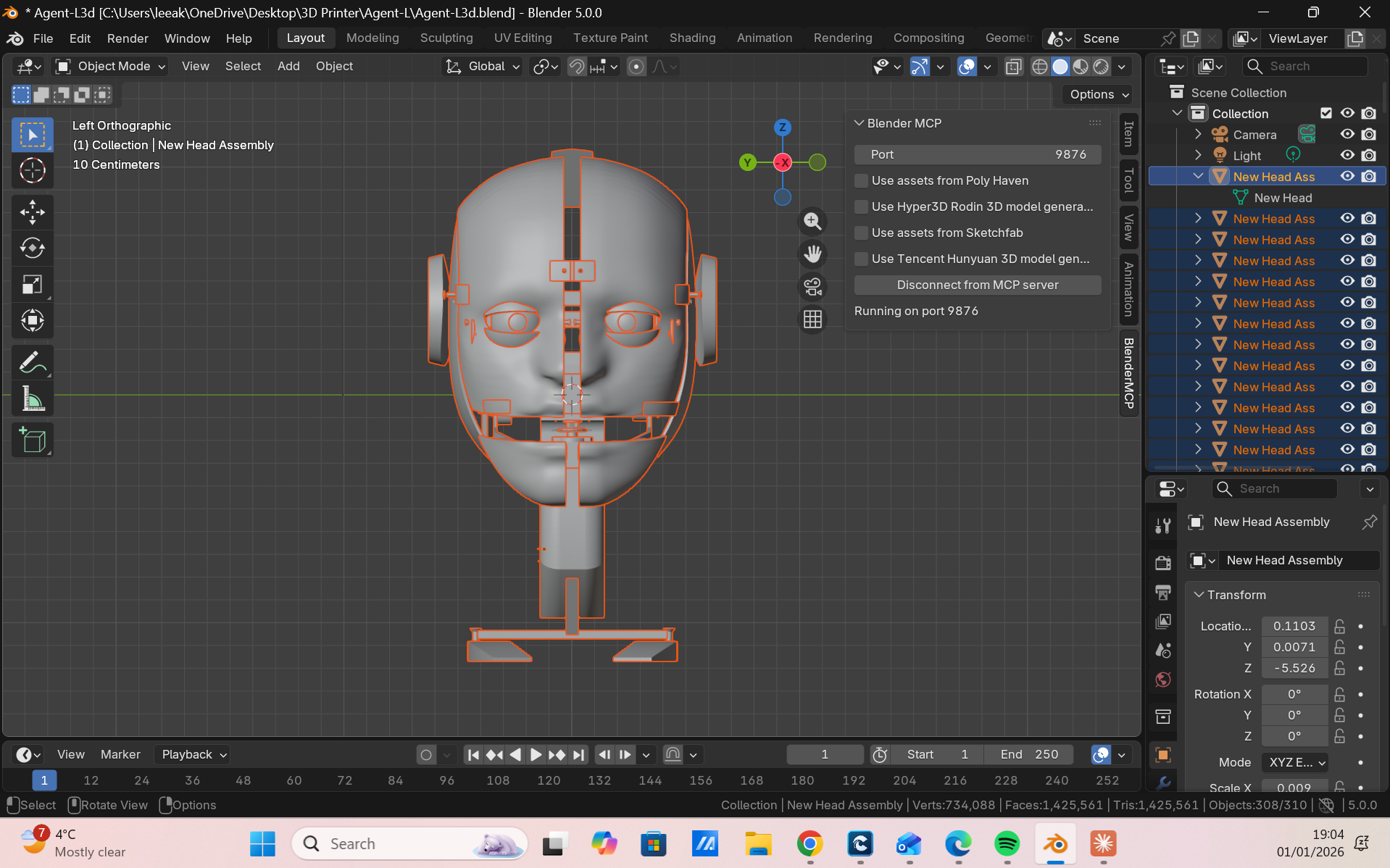
